Towards Exascale Computing: European DEEP-EST research project

The DEEP-EST ("Dynamical Exascale Entry Platform - Extreme Scale Technologies") project is funded under the Future and Emerging Technologies (FET) programme of the European Commission's Horizon 2020 framework. Its aim is to create the blueprints for the hardware and software of the next generation of ("pre-exascale") supercomputers.

The current goal in supercomputing is to reach exascale performance: 10 to the power of 18 floating point operations per second (FLOPS). That number is called a quintillion on the short scale used in American English and a trillion on the long scale traditionally used in much of continental Europe. This performance is needed to drive large-scale scientific simulations and big data analytics forward. Current supercomputers achieve about 0.2 exaFLOPS (that is, 200 petaFLOPS or 200 thousand teraFLOPS). For comparison: if you have a very high-end personal computer, its CPU can compute maybe half a teraFLOPS.
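To make the orders of magnitude concrete, here is a small sketch of the unit arithmetic. It uses only the figures quoted above (200 petaFLOPS for current supercomputers, half a teraFLOPS for a high-end PC CPU); the variable names are made up for illustration.

```python
# SI prefixes as powers of ten.
TERA = 10**12
PETA = 10**15
EXA = 10**18

# Figures taken from the text above.
current_supercomputer = 200 * PETA   # about 0.2 exaFLOPS
high_end_pc_cpu = TERA // 2          # about half a teraFLOPS

# The same supercomputer performance expressed in different units.
in_exaflops = current_supercomputer / EXA
in_teraflops = current_supercomputer / TERA

# How many such PC CPUs would match one current supercomputer,
# and how far current machines are from the exascale goal.
pcs_per_supercomputer = current_supercomputer / high_end_pc_cpu
factor_to_exascale = EXA / current_supercomputer

print(f"{in_exaflops} exaFLOPS = {in_teraflops:,.0f} teraFLOPS")
print(f"PC CPUs needed: {pcs_per_supercomputer:,.0f}")
print(f"Speed-up still needed for exascale: {factor_to_exascale:.0f}x")
```

In other words, under these rough numbers a current top supercomputer corresponds to several hundred thousand high-end PC CPUs and is still a factor of five away from exascale.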

Exascale computing is a kind of "wall": it is hard to reach and, in particular, hard to go beyond anytime soon. While according to Moore's law the number of transistors in a CPU doubles every two years, the performance of a CPU no longer doubles that fast (the extra transistors go into more cores and more caches). Currently, the only way to boost performance is to use specialised "accelerators" instead of generic CPUs. Graphics processing units (GPUs) are one example, but accelerators can also sit in other parts of a supercomputer, e.g. in the storage or in the network fabric that interconnects the many CPU nodes. DEEP-EST therefore proposes a Modular Supercomputing Architecture (MSA) in which the supercomputer is composed of multiple modules, each specialised for a particular kind of workload: e.g. a GPU-heavy booster module for computations that scale well and are suitable for GPUs, a "normal" CPU cluster module for applications that do not scale that well, and a data analytics module with hardware specialised for machine learning.

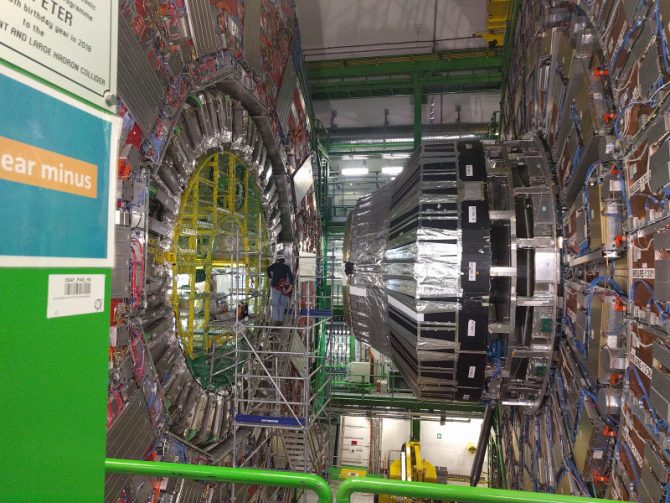

Talking about accelerators: one of our project partners is CERN, and the project meeting took place there. We were lucky that the Large Hadron Collider (LHC) particle accelerator is currently in a maintenance/upgrade phase, so we were able to see one of the detectors (when the LHC is running, the collisions create lots of radiation). Find the human in the picture below:

DEEP-EST has reached the middle of the project duration, and the first module, the CPU cluster module, has been installed. An additional barrier in exascale computing is energy, which also means heat: the computers produce it and need to be cooled down. DEEP-EST is therefore also working on novel cooling solutions, e.g. water cooling. Typical data centres use air cooling, i.e. extra energy is needed to cool down air that is then blown into the racks. The DEEP-EST water cooling instead makes it possible to take water at normal temperatures and pipe it through those components that create most of the heat. This warms up the water, and the energy contained in the warm water can then even be used for something else. So instead of needing extra energy for cooling, the DEEP-EST warm water cooling even makes it possible to recover energy (of course, this is energy that was put into the system as the electrical power consumed by the supercomputing components). You can see the water pipes of the newly installed CPU cluster module in the middle rack below:

Talking about energy efficiency: another trend is field-programmable gate arrays (FPGAs), which are more energy efficient than CPUs or GPUs. These are also used in one of the specialised DEEP-EST modules.

The downside of using accelerators is that they need special programming. As a DEEP-EST member, the University of Iceland is developing machine learning software that exploits the DEEP-EST Modular Supercomputing Architecture (MSA) as well as possible. This includes clustering (DBSCAN) and classification via Support Vector Machines (SVMs) and Deep Learning/Deep Neural Networks.
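To give an idea of what the clustering part does, here is a minimal, serial sketch of the textbook DBSCAN algorithm in plain Python. It is not the project's software, which has to be parallelised and tuned for the MSA; the function names and the toy data are made up for illustration. The idea is that a point with at least `min_pts` neighbours within distance `eps` is a "core" point, clusters grow from core points to their neighbours, and everything that is not reachable is labelled as noise.

```python
from math import dist

NOISE = -1  # label for points that belong to no cluster


def region_query(points, i, eps):
    """Indices of all points within distance eps of points[i] (including i)."""
    return [j for j, q in enumerate(points) if dist(points[i], q) <= eps]


def dbscan(points, eps, min_pts):
    """Return one cluster label per point; NOISE (-1) marks outliers."""
    labels = [None] * len(points)  # None means "not visited yet"
    cluster = -1
    for i in range(len(points)):
        if labels[i] is not None:
            continue
        neighbours = region_query(points, i, eps)
        if len(neighbours) < min_pts:
            labels[i] = NOISE  # may later turn out to be a border point
            continue
        cluster += 1  # points[i] is a core point: start a new cluster
        labels[i] = cluster
        queue = list(neighbours)
        while queue:
            j = queue.pop()
            if labels[j] == NOISE:
                labels[j] = cluster  # border point: joins, but is not expanded
            if labels[j] is not None:
                continue
            labels[j] = cluster
            j_neighbours = region_query(points, j, eps)
            if len(j_neighbours) >= min_pts:
                queue.extend(j_neighbours)  # j is a core point too: keep growing
    return labels


# Toy data: two small groups of points and one far-away outlier.
points = [(0, 0), (0, 1), (1, 0), (10, 10), (10, 11), (11, 10), (50, 50)]
labels = dbscan(points, eps=1.5, min_pts=3)
print(labels)
```

On the toy data, the first three points form one cluster, the next three a second one, and the last point is noise. Every `region_query` call in this sketch scans all points, which is one reason why clustering large data sets benefits from parallel hardware.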

You can follow the progress of this project on the DEEP-EST web site and Twitter channel.