The University of Iceland is part of a consortium that successfully applied for funding from the European Horizon 2020 research programme with the European Open Science Cloud (EOSC)-centric proposal EOSC-Nordic.

EOSC-Nordic aims to foster and advance the take-up of the European Open Science Cloud (EOSC) at the Nordic level by coordinating the EOSC-relevant initiatives taking place in Finland, Sweden, Norway, Denmark, Iceland, Estonia, Latvia, Lithuania, the Netherlands and Germany. EOSC-Nordic aims to facilitate the coordination of EOSC-relevant initiatives within the Nordic and Baltic countries and to exploit synergies to achieve greater harmonisation of policy and service provisioning across these countries, in compliance with EOSC agreed standards and practices. By doing so, the project will seek to establish the Nordic and Baltic countries as frontrunners in the take-up of the EOSC concept, principles and approach. EOSC-Nordic brings together a strong consortium including e-Infrastructure providers, research-performing organisations and expert networks, with national mandates with regard to the provision of research services and open science policy, and wide experience of engaging with the research community and mobilising national governments, funding agencies, international bodies, global initiatives and high-level experts on EOSC strategic matters.

A successful EOSC-Nordic will reinforce Nordic research area capability and competitiveness, create a profile of a leading knowledge-based region, increase the ability of the region to attract talent and investments, enhance its appeal as a partner in cooperation, and strengthen the Nordic region and its efforts in the overall EOSC, through the creation of a cross-border cooperation model for Europe.

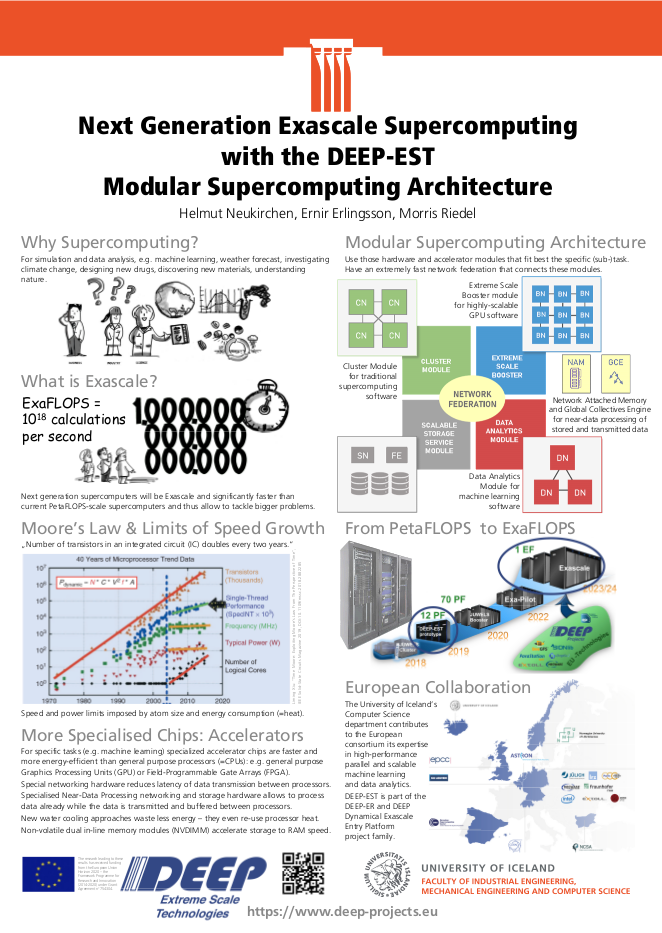

The project is coordinated by the Nordic e-Infrastructure Collaboration (NeIC), and the University of Iceland is one of the project participants. The University of Iceland's diverse team is led by Ebba Þóra Hvannberg. Helmut Neukirchen and Morris Riedel contribute their knowledge of e-Science, such as scalable, parallel machine learning, scientific workflows, and data federation. In addition to these researchers from the University's Computer Science department, experts from other departments of the University of Iceland contribute to EOSC-Nordic.

The project runs from 1 September 2019 to 31 August 2022. More information can be found on the EOSC-Nordic web page and also on my local page covering this research project.