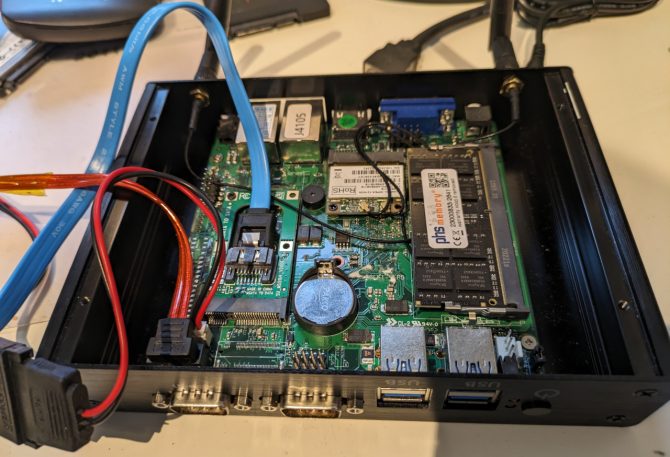

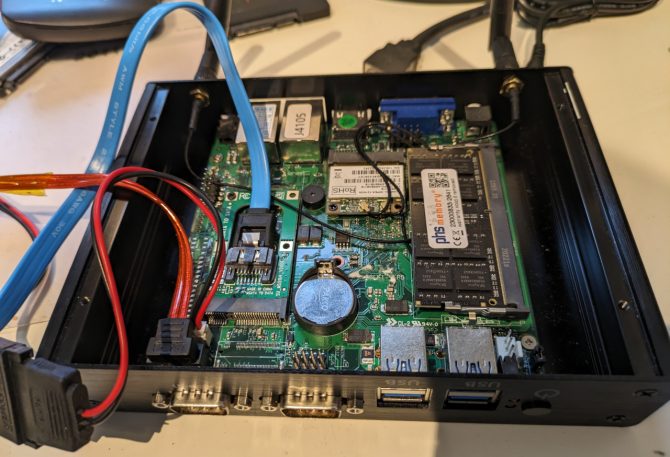

While I intended to use a Raspberry Pi 4 as a small server, I also ordered a small system from China: a BEBEPC, comparable to the Qotom mini PCs. (Qotom mini PCs are slightly better documented, but they typically have less powerful CPUs: even though they have Core i3 CPUs, these are so old that a more recent Celeron CPU is faster.) It is based on an Intel J4105 CPU (TDP of 10 W, 4 cores, 1.5 GHz base frequency, 2.5 GHz burst frequency). Its advantage over the Raspi is native SATA ports: one standard SATA connector with a 5 V power supply and one mSATA connector carrying a 3.3 V power supply. Because mSATA SSDs are only available in smaller sizes, I ordered an mSATA to SATA adapter and a 5 V SATA power splitter cable so that I can run a RAID of two SATA SSDs. Note, however, that the SATA ports are only 3 Gbit/s, i.e. 300 MB/s, which means that a 500 MB/s SSD is already overkill. The biggest advantage, though, is that the system can (obviously) run Intel-only code, in particular virtual machine images or containers that are only available for Intel, e.g. using Proxmox VE.

Both systems come with 8 GB LPDDR4 RAM, but for the J4105, even 16 GB is possible (see below).

Both systems can be passively cooled. For the Raspi, I used a cooling case from https://www.coolingcases.com/ (Update 2024: now defunct). It cools well, but the metal affects the range of the onboard WiFi (not that relevant for a server). The J4105 also came with a case that allows passive cooling. While it is still tiny for a PC, it has approx. 4 times the volume of the Raspi.

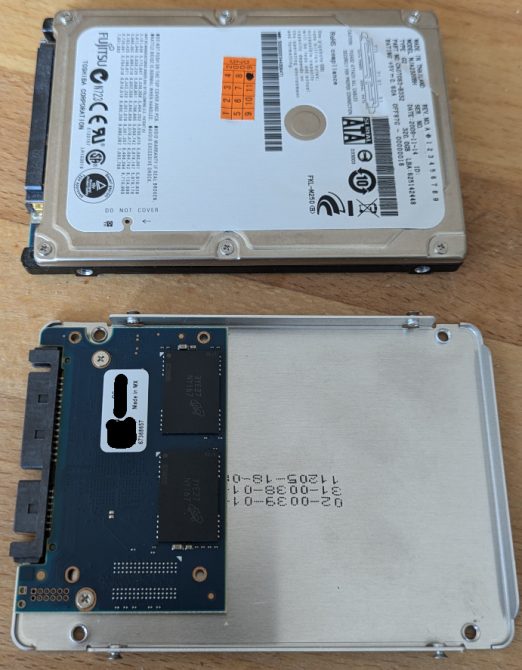

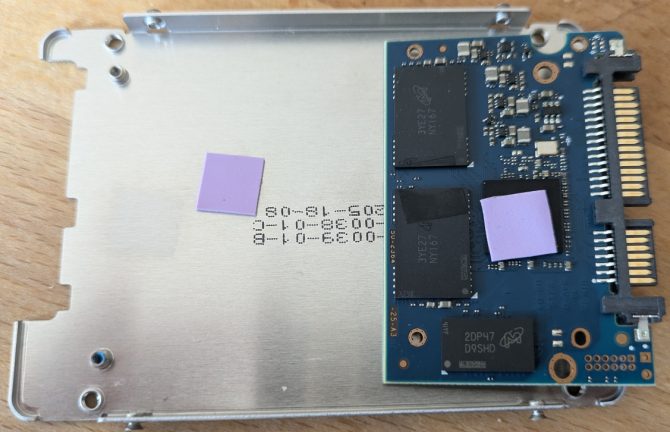

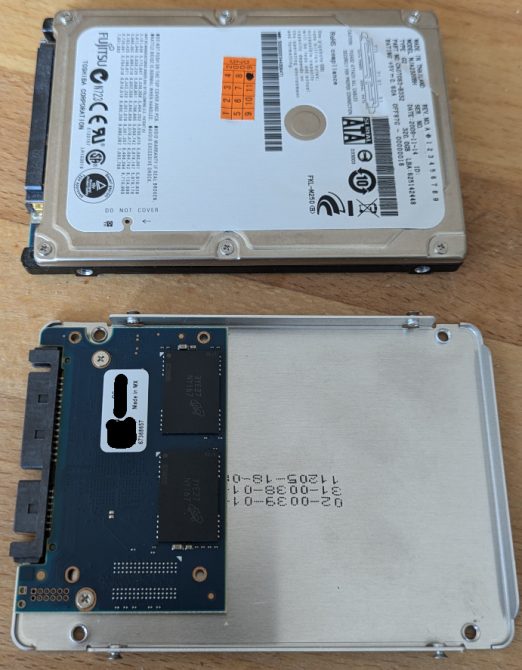

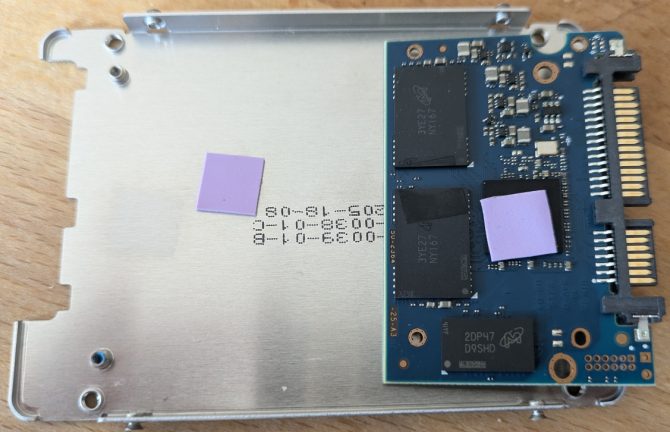

As you can see in the above photos, squeezing in two SATA SSDs (for RAID) was a challenge and I had to bend the connector cables very tightly (which might not be healthy for the connectors, cables and PCBs). Therefore, I had considered removing the 2.5" case from the SATA SSDs and using only the bare PCBs. But as you can see in the photos below, there were pads glued to the SSD controller chip, and I am not sure whether these are thermal pads and the metal case is needed as a heat sink. Hence, I decided to keep using the case. (On the other hand, the second pad only marginally covers the two NAND chips, and the NAND chips on the other side of the PCB are not padded at all. So maybe the pads are just mechanical, to prevent rattling.)

SSD case open (HDD for comparison)

SSD PCB with pads (are these thermal pads?)

The J4105 system certainly has more compute power than the Raspi's ARM CPU, so the remaining question is power consumption. Hence, I did some tests and measurements using a cheap power meter that claims 2% precision. Both systems were connected to a monitor via HDMI at Full HD.

Intel J4105 measurements

Because I had not yet installed Linux at the beginning, the system was running Windows 10. Idle means that only the built-in task manager was running in the foreground (to display the clock frequency), plus all the background services that Windows 10 has by default. CPU load was generated using a batch file containing an endless loop.

The J4105 clocks down to 0.78 GHz when idle and the power consumption of the whole system (with one mSATA and one SATA SSD) is then 3.8 W.

With 1 core being busy, it still clocks up to 2.4 GHz and consumes 7.2 W.

With 2 cores being busy, it still clocks up to 2.4 GHz and consumes 10.3 W.

With 3 cores being busy, it clocks up to 2.35 GHz and consumes between 11.8 W and 12.1 W.

With 4 cores being busy, it clocks up to 2.19 GHz and consumes between 11.4 W and 12.0 W. (So it seems the reduced clock saves power).

I ran it with 4 cores busy for an hour and the measurements did not change, i.e. no thermal throttling seems to have occurred. The case did not get hot either, so either the passive cooling is really good or the contact between CPU and case is bad (but in that case, thermal throttling would have been expected).
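To make the trend in these readings easier to see, here is a small sketch that computes how many watts each additional busy core adds. Where I measured a range, it uses the midpoint; that choice, and the helper names, are my own assumptions for illustration.

```python
# Whole-system power in watts, keyed by number of busy cores (0 = idle).
# Ranges from the measurements are stored as (low, high).
J4105_POWER_W = {
    0: (3.8, 3.8),
    1: (7.2, 7.2),
    2: (10.3, 10.3),
    3: (11.8, 12.1),
    4: (11.4, 12.0),
}


def midpoint(reading):
    """Collapse a (low, high) reading into a single value."""
    low, high = reading
    return (low + high) / 2


def power_steps(measurements):
    """Return {busy_cores: extra watts compared with one busy core fewer}."""
    steps = {}
    for cores in sorted(measurements):
        previous = cores - 1
        if previous in measurements:
            delta = midpoint(measurements[cores]) - midpoint(measurements[previous])
            steps[cores] = round(delta, 2)
    return steps


if __name__ == "__main__":
    for cores, delta in power_steps(J4105_POWER_W).items():
        print(f"core {cores}: {delta:+.2f} W")
```

The first two cores add roughly 3 W each, the third adds much less, and the fourth adds nothing on average, which fits the observation that the clock drops once all cores are busy.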

Raspberry Pi 4 measurements

I had OSMC with KODI running, but nothing else, i.e. the KODI UI was idle, but all the background services were running. The latest firmware as of 4 June 2021 was used, and the only storage was an SDHC card. CPU load was generated using the stress command.

The Raspberry Pi 4 consumed 3.8 W to 4.0 W when idle.

With 1 core being busy, it consumes 4.5 W.

With 2 cores being busy, it consumes 5.0 W.

With 3 cores being busy, it consumes between 5.4 W and 5.5 W.

With 4 cores being busy, it consumes 6.0 W.

Under load, the temperature with the cooling case from https://www.coolingcases.com/ was approx. 52 °C (so it prevented the thermal throttling that would start at 80 °C). Surprisingly, even in idle mode, the temperature was 40-42 °C. The tiny case feels much warmer than the bigger case of the Intel system, so it seems that size matters.
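For anyone who wants to repeat the temperature check: on Linux, the CPU temperature is usually exposed through the thermal sysfs interface in millidegrees Celsius. A minimal sketch, assuming the sensor is `thermal_zone0` (which may differ on other systems); the helper names are my own.

```python
from pathlib import Path

# Throttling threshold for the Raspberry Pi 4 as quoted in this post.
THROTTLE_LIMIT_C = 80.0
# Assumption: the CPU sensor is thermal_zone0; check your own system.
SENSOR = Path("/sys/class/thermal/thermal_zone0/temp")


def parse_millidegrees(raw: str) -> float:
    """Convert the sysfs value (millidegrees Celsius) to degrees Celsius."""
    return int(raw.strip()) / 1000.0


def headroom_c(temp_c: float, limit_c: float = THROTTLE_LIMIT_C) -> float:
    """Return how many degrees remain before the throttling threshold."""
    return limit_c - temp_c


if __name__ == "__main__":
    if SENSOR.exists():
        temp = parse_millidegrees(SENSOR.read_text())
        print(f"{temp:.1f} C, {headroom_c(temp):.1f} C below the throttle limit")
    else:
        print(f"{SENSOR} not found on this system")
```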

Conclusions

In summary, the idle power consumption of both systems is comparable. While the busy consumption is lower with the Raspberry Pi 4, it is of course less powerful than the J4105 system. For the J4105, I never observed the full 2.5 GHz burst clock rate (only 2.4 GHz). Even though the CPU TDP is 10 W, the whole system consumed up to 12.1 W (the RAM, the two SSDs, WiFi, HDMI output, external power supply, etc. probably also add their share; during boot, I even saw 14.8 W).

Note that others suggest 2.7 W idle for the Raspi 4 (but this seems to require switching off a lot of I/O, e.g. HDMI, which I did not do, nor did I minimise background processes) or even as low as 2.1 W. On the other hand, many others report that they cannot get the system cooler than 42 °C in idle either (with either a fan or a heatsink), so a Raspi that feels warmer than your hand seems to be normal. The J4105 system with the bigger case was considerably cooler.

It seems that the J4105 is a good 24/7 home server system, i.e. more powerful than the Raspi when needed, but still not consuming more power when idle. (A German c't article confirms this for a thin client that is also J4105-based.)
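For a 24/7 system, the idle figure is the one that dominates the electricity bill. A quick sketch of the yearly energy, assuming a constant draw over a 365-day year (a simplification; a real server alternates between idle and load):

```python
HOURS_PER_YEAR = 24 * 365  # assumption: non-leap year, running 24/7


def annual_kwh(watts: float) -> float:
    """Return the energy in kWh consumed per year at a constant power draw."""
    return watts * HOURS_PER_YEAR / 1000.0


if __name__ == "__main__":
    # Power values are the measurements from this post.
    readings = {
        "J4105 idle": 3.8,
        "J4105 peak under load": 12.1,
        "Raspberry Pi 4 idle (lower reading)": 3.8,
        "Raspberry Pi 4, 4 cores busy": 6.0,
    }
    for label, watts in readings.items():
        print(f"{label}: {annual_kwh(watts):.1f} kWh/year")
```

Multiply the result by your own electricity price to get the yearly cost.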

The ultimate (at time of writing) passively cooled server with ECC RAM would be the ASRock Industrial iBOX-V2000M or iBOX-V2000V, but these are not available to private users. In general, though, any ASRock motherboard together with an AMD Pro CPU should support ECC.

Some documentation on the BEBEPC system

RAM: 16 GB DIMMs supported

An even more powerful system based on the J4125 (= J4105 with higher clock) suggests that with dual-rank modules even 16 GB per RAM module is possible, i.e. with two banks, even 32 GB of RAM. The power consumption of that J4125-based system has also been measured and is higher (best explained by the fact that it is faster, i.e. cannot clock down as much: 2000-2700 MHz vs. 1500-2500 MHz).

I therefore ordered a 16 GB DIMM: I can confirm that this works. However, my system has just 1 RAM socket, so 16 GB is the maximum.

Auto power on

It seems that to make the system automatically power-on after a power outage, a jumper needs to be set at PWRON1 at the pins marked PWR_SW1.

Independently of that, the system sometimes does not start after having been powered off, not even after the power button has been pressed. In this case, the RTC/CMOS battery needs to be removed and inserted again.

BIOS settings

F11 or DEL to enter the AMI BIOS.

F2 to select boot drive.

MAC address can be found via Advanced.

Change OS to Linux via Chipset-South Bridge.

Change SATA Device Type to SSD via Chipset-South Cluster Configuration (not sure whether Mechanical Presence Switch setting matters and needs to be disabled).

Not sure about DITO (the time a given port must be idle before HW may enter DevSleep autonomously): might help if the SSD gets hot or consumes too much energy.

Chipset-Miscellaneous Configuration: Disable Power Button Debounce Mode so that the power button wakes the system from standby mode.

Security-Secure Boot: Disable if booting Linux causes problems.

Security-Quiet Boot: Disable to see some BIOS messages at boot.

Boot: Change order of boot devices.

US keyboard

On German/Icelandic keyboards, the pipe symbol is left of the enter key if US keyboard layout is assumed.

Update 2023: Intel N100, N200 and N300/N305 Alder Lake N CPUs

The Intel N100, N200 and N300/N305 CPUs are a sort of successor to the J4105 CPU. N100 and N200 both have 4 cores; the N200 can clock higher and has a better GPU than the N100. The numbering is a mess (e.g. N95 is faster than N100). As a rule of thumb, CPUs ending with the digit 5 are allowed to get hotter (i.e. have a higher TDP), so they can probably sustain all cores at the highest speed for longer. But I do not know what the reason for the different TDP is (are the variants the same silicon die, binned into the digit-5 CPUs based on whether they can handle higher temperatures?). N300/N305 have 8 cores and are also marketed as "Core i3". While they support only one DIMM (i.e. a single memory channel, which is slower than two), they support DDR5 RAM, which is 50% faster anyway.

If the BIOS supports it, these systems even allow ECC; see my post on the iKOOLCORE R2 for more details on ECC. Even if the BIOS does not support enabling IBECC, there are claims that you can set it from the command line using the AMISCE tool from AMI and then reboot (just make sure that you can clear the CMOS/NVRAM or have some rescue mode). On Linux, this is the SCELNX_64 / scelnx tool, but maybe the uefisettings tool works as well?

Those Chinese fanless mini PCs with the Intel i3-N305 also look good, but you never know what backdoors are in the BIOS. The Protectli systems have coreboot, but are more expensive and have outdated hardware, i.e. none of these new processors. The Starlabs Byte has an N200 with coreboot, but DDR4 RAM only. The official maximum RAM is 16 GB according to Intel, but there are systems offered with 32 GB:

- TerraMaster F4-424 Pro NAS with N300 and 32 GB RAM. (While TerraMaster is Chinese, it might be interesting to see when the non-Chinese QNAP and Synology offer Alder Lake N-based systems. But in contrast to TerraMaster, they do not support installing your own Linux, e.g. TrueNAS SCALE, well.)

- CWWK Magic Computer with various CPU and RAM configurations; it even has a PCIe socket.

- iKoolCore R2: it has a fan but is super tiny. For details, including getting ECC to run on Linux, see my blog post on the iKOOLCORE R2.

- ODROID H4 from Hardkernel, which is South Korean, so no China BIOS. Hence, the ODROID H4 sounds like a very good buy, and the support provided by Hardkernel also seems to be good. But it does not come with a decent case. If you sacrifice the NVMe SSD, you can use the NVMe port (which is in fact just PCIe) to add 4 further 2.5 Gb Ethernet ports, making it a great router. However, you then need to use either the slow eMMC or the SATA ports for storage.

Note that most of the above come with Intel i226 Ethernet chips, which have good driver support in Linux and BSD. However, there are claims that these chips crash after a couple of hours and that the only way to prevent this is to switch off PCIe power saving (ASPM). On the other hand, you find people reporting that their N100 systems with i226 run rock-solid.
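On Linux, the currently active ASPM policy can be read from `/sys/module/pcie_aspm/parameters/policy`, where the active entry is shown in square brackets; ASPM can be switched off altogether with the kernel command line parameter `pcie_aspm=off`. A small sketch for checking the policy before and after such a change (the function name is my own, and I have not verified that this cures the reported i226 crashes):

```python
import re
from pathlib import Path

POLICY_FILE = Path("/sys/module/pcie_aspm/parameters/policy")


def active_aspm_policy(text: str):
    """Return the bracketed (active) policy name, or None if none is marked."""
    match = re.search(r"\[(\w+)\]", text)
    return match.group(1) if match else None


if __name__ == "__main__":
    if POLICY_FILE.exists():
        print("Active ASPM policy:", active_aspm_policy(POLICY_FILE.read_text()))
    else:
        print(f"{POLICY_FILE} not found (not Linux, or ASPM support not built in)")
```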

In general, these new CPUs are slightly faster than, e.g., an 8-core C3758 Xeon-like Atom CPU that is three years older. The C3758, however, supports more RAM and even ECC, and the new CPUs are more I/O limited (in terms of PCIe lanes). The C3758 has a 25 W TDP but can still be passively cooled.

A test of an Intel N200-based fanless mini from Asus with DDR4 RAM mentions that N200 with DDR5 RAM is faster. Idle power consumption is claimed to be 5-6 W and 22 W under full load.

Others show an N305 system idling at 15-16 W, which is significantly more. (But the N305 has a 15 W TDP vs. 7 W for the N300. Otherwise, both CPUs are exactly the same; I guess the N300 will simply start to throttle when all cores are stressed. The N305 can also be restricted to a lower TDP via the BIOS.) There, you also find a performance comparison with a Raspberry Pi 4 and Pi 5: the N100 is twice as fast as a Pi 5 and four times as fast as a Pi 4, and the N305 is almost twice as fast as an N100.
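Putting these ratios on one scale, with the Pi 4 as 1.0, gives a rough ranking. This is only a sketch of the arithmetic; the N305 value is an upper bound, since the claim is "almost" twice an N100.

```python
def relative_speeds():
    """Return speeds relative to a Raspberry Pi 4 (= 1.0), from the quoted ratios."""
    speeds = {"Pi 4": 1.0}
    speeds["N100"] = 4.0 * speeds["Pi 4"]   # N100 is four times as fast as a Pi 4
    speeds["Pi 5"] = speeds["N100"] / 2.0   # N100 is twice as fast as a Pi 5
    speeds["N305 (upper bound)"] = 2.0 * speeds["N100"]  # "almost" twice an N100
    return speeds


if __name__ == "__main__":
    for name, factor in sorted(relative_speeds().items(), key=lambda kv: kv[1]):
        print(f"{name}: {factor:.1f}x")
```

The two N100 ratios together imply that a Pi 5 is about twice as fast as a Pi 4.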